
Twitter labeled Donald Trump's "STOP THE FRAUD" tweet as "disputed" (Shutterstock)

If your time is short

- Major social media platforms have policies that prohibit spreading falsehoods about elections. But their enforcement of these policies varies and is hard to track.

- Some platforms have newsworthiness or public-interest exceptions that allow high-profile users to ignore guidelines.

- Advocates are calling for platforms to take proactive steps to limit election misinformation and haven’t been satisfied with the response. Misinformation is still evident across the major platforms.

Online misinformation about the 2020 election drove all sorts of real-world consequences, from the Jan. 6 attack on the U.S. Capitol to the then-president of the United States getting banned from Twitter.

Those false claims that the last election was stolen haven’t gone away. Instead, they’re driving new campaign messaging for the 2022 midterm races through ads, videos and misleading memes.

As we reviewed the rules for false claims about elections and voting on social media, we found that determining what gets removed, what gets labeled and what gets downgraded isn’t straightforward. Every platform is different, and their policies aren’t always clearly outlined. Even when policies are clear, platforms may still shift them quickly without making the changes obvious to users.

"One of the things that we see is sometimes platforms go into an election with particular policies, and then a new and novel tactic or dynamic emerges that they didn’t necessarily have a policy for," said Renée DiResta, research manager at the Stanford Internet Observatory, a lab specializing in social media information.

Lessons from the last presidential election may not apply to the upcoming midterms. During the 2016 election, for example, Russia interfered through propaganda and cyberattacks, so social media companies prepared for that familiar fight heading into 2020. But in 2020, many false claims came from Americans themselves.

"Much of the misinformation in the 2020 election was pushed by authentic, domestic actors," according to The Long Fuse, the Election Integrity Partnership’s extensive analysis of misinformation on social media in 2020.

Platforms had to change their game plans — quickly.

Keeping that context in mind, we talked to researchers, industry experts and the platforms to try to determine what kind of social media monitoring is likely to happen this election season.

What platforms publicly say they do to tackle election-related falsehoods

Platforms generally respond to misinformation with a mix of three tactics: removing content, reducing its visibility or providing additional context.

Twitter says it reserves the right to label or take down content that confuses users about their ability to vote. This includes wrong information about polling times or locations, vote counting, long lines, equipment, ballots, election certification and any other parts of the election process. It also includes politicians claiming victory before election results are certified and fake accounts posing as politicians. Twitter’s policy does not cover falsehoods about politicians, political parties or anything that people could find "hyperpartisan," unless it explicitly relates to voting. Twitter also hasn’t allowed political ads since 2019.

Facebook says it will remove content that "misrepresents who can vote, methods for voting, or when or where people can vote." It labels content that fact-checkers (like PolitiFact) rate false and attaches their fact-checks to those claims. Direct speech from politicians and political parties, including campaign ads, remains exempt from fact-checking. Political ads must confirm that they’re coming from a real source and carry a "paid for by" disclaimer, and they go into a public, searchable Ad Library for seven years. Facebook also has a penalty system that demotes content from accounts, pages, groups and websites that share posts fact-checkers rate false, as well as a strike system for violations of its community guidelines.

YouTube says it doesn’t allow falsehoods related to voting, candidate eligibility, or election integrity for past U.S. presidential elections. It has a "three-strikes" system; if someone has violated Community Guidelines three times in 90 days, that person’s (or group’s) channel is removed.

TikTok says it removes content that "erode(s) trust in public institutions," such as false statements about the voting process, and works with fact-checking partners, including PolitiFact, to do so. TikTok redirects search and hashtags related to these topics to its Community Guidelines.

The platform has previously applied an automatic label linking its election guide to election-related content and is expected to do so again in the midterm elections. TikTok also makes it harder for people to see content that can’t easily be fact-checked, such as candidates’ claims of victory before all the votes are counted or rumors about politicians’ health.

Experts say the policies are vague and not equally enforced

Many platforms release updates about their election misinformation policy through blog posts, but they also reserve the right to take down content under a category that isn’t explicitly outlined.

"A lot of times, these policies are pretty vague, whereas the content they're dealing with is pretty specific," said Katie Harbath, the former public policy director for global elections at Facebook. The policies can also be hard to monitor, she said. "There's been many times where all of a sudden, I find out a month or two later the platform quietly updated their policy."

Once users find the policies, it’s still hard to discern what happens when they hit "tweet." And when people don’t know what’s going on, they’re less likely to trust the mission of these rules.

"It’s very hard for platforms to have legitimacy and enforcement if users can’t find their policy, if they can’t understand the policy or if the policies feel ad hoc," said DiResta.

In January, Twitter spokesperson Elizabeth Busby told CNN that Twitter has stopped enforcing the civic integrity policy — its rules about using the platform to interfere in elections, the census or referendums — as it pertains to the 2020 election, because that election is over.

This decision may have consequences for voters in 2022, said Yosef Getachew, media and democracy program director at Common Cause, a Washington, D.C.-based public interest group. (Common Cause supports PolitiFact's Spanish fact-checking in 2022.)

Many people still believe the 2020 election was stolen, and candidates have been sharing that message. "By not combating this, they're helping fuel the narrative that this big lie was accurate, when it's not," Getachew said.

Emma Steiner, a disinformation researcher at Common Cause, said she still sees unmarked tweets falsely claiming that mail ballot drop boxes aren’t safe. (Drop boxes are secure boxes, often placed outside polling sites or government buildings, into which voters can drop completed ballots received by mail. The boxes often have more security features than standard mailboxes and have been used in some jurisdictions for decades.)

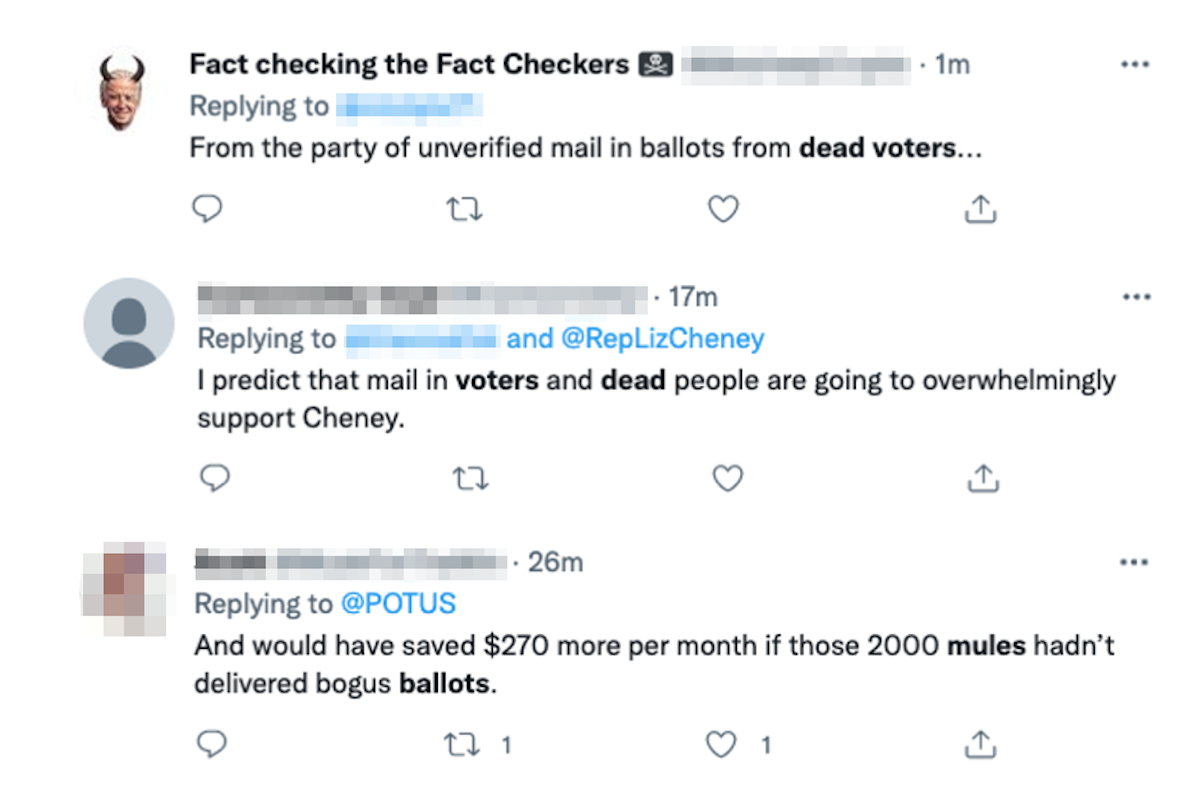

Platforms don’t share data proactively, Steiner said, so it’s hard to gauge exactly how many posts with election-related falsehoods get sent around. It took PolitiFact about 30 seconds in the Twitter search tool — trying terms like "ballot mules" and "dead voters" — to find multiple false claims about elections.

Searching the same terms on YouTube yielded recommendations of news clips, mostly debunking the false theories. However, when we looked at channels that put out misleading videos, such as Project Veritas, it was easy to find a widely shared video containing false information about voting laws.

YouTube spokesperson Ivy Choi told PolitiFact that content from Project Veritas, a right-wing activist group, "is not prominently surfaced or recommended for search queries related to the 2022 U.S. midterms." The company said an information panel with fact-checks may pop up when users type in terms like "ballot mules" that are widely associated with election fraud. However, no such information panel appeared when PolitiFact searched for that term.

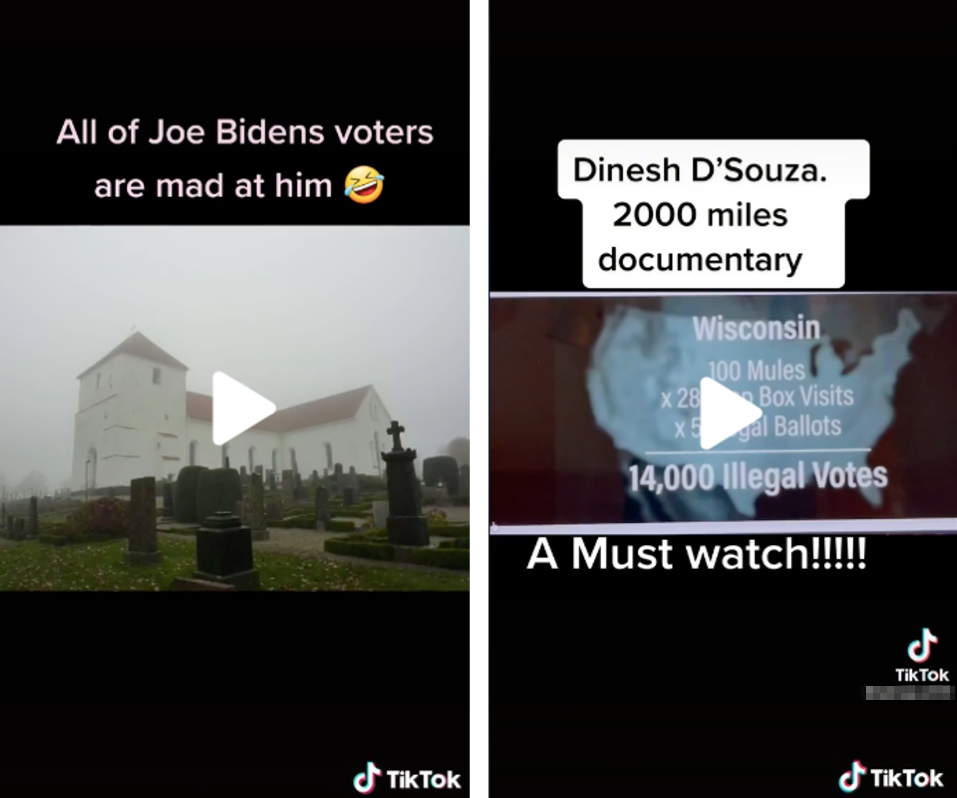

TikTok prioritized PolitiFact’s fact-check on dead voters, but other falsehoods appeared easily after minimal scrolling.

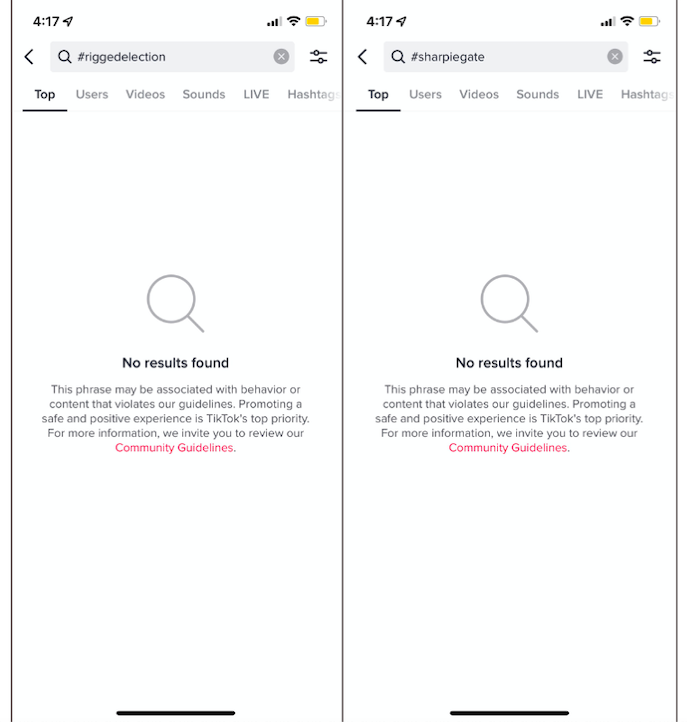

Some hashtags tied to election falsehoods, like #sharpiegate and #riggedelection, are unsearchable; searching them brings up a link to TikTok’s Community Guidelines instead.

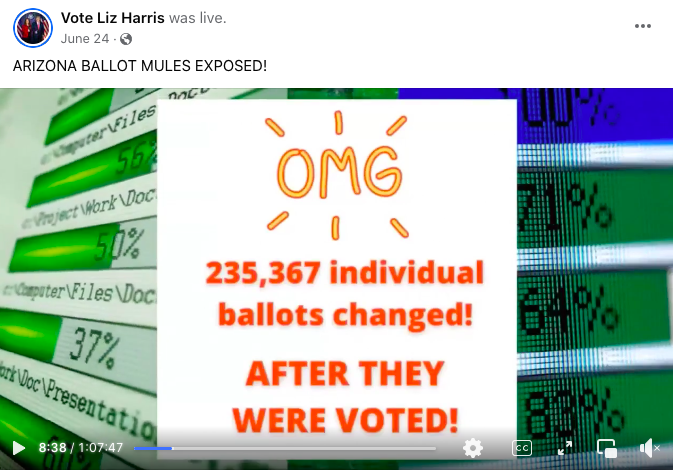

On Facebook, searching "ballot mules" yielded a recent recording of a live broadcast with multiple falsehoods about the 2020 election. One of the accounts that shared it belongs to a candidate for state representative in Arizona.

The compounding challenges of implementation

Labeling is a popular way for platforms to warn users about misinformation without taking it down. Removing content can make people suspicious and curious, DiResta said, so they may go searching for it on less-moderated platforms such as Rumble. A label, by contrast, can add context to the information or note that it comes from an unreliable source.

It takes time for fact-checkers to determine whether something is true. And while fact-checkers research a claim, misinformation spreads. So DiResta recommends that platforms apply labels that give context about common patterns of misinformation.

"It’s hard. How do you do that at scale?" said Harbath. "It’s a lot more time-intensive, human-intensive to actually fact-check things."

Experts also said it’s hard to measure whether platforms are abiding by their written policies.

"Access to data is super limited, so we don't really get good insight into the full true scope of misinformation that's out there on social media," said Rachel Moran, a postdoctoral fellow at the University of Washington who specializes in how people respond to social media misinformation.

She also said it’s hard to determine through traditional research methods, such as surveys or interviews, whether fact-checks stop people from believing things that aren’t true. Users don’t always log on to social media for a civics lesson, and Moran said some people go to social media to view problematic content.

"And those people are happy Facebook users, are happy TikTok users, and so they're profitable, so they're (the platforms) not necessarily going to measure something that might undermine their potential profit margin," said Moran.

Emerson Brooking, a senior fellow at the Digital Forensic Research Lab of the Atlantic Council, noted that social media platforms are run by private companies.

"They're not public institutions, even though a lot of what they do is now in the public trust," said Brooking, who specializes in disinformation and internet policy.

How platforms avoid enforcement and users avoid monitoring

Many platforms, including Twitter and Facebook, have newsworthiness or public-interest exceptions, meaning false content from prominent people may be left up rather than removed.

The platforms argue that people should have full knowledge of what their candidates are posting. But DiResta’s research has found that whether something is "newsworthy" often depends on how widely it’s shared. So allowing something to be shared because it’s "newsworthy" can be circular logic.

But there are also questions about users’ rights to see the content.

"I'm generally of the viewpoint and have been, even when I was at the company, that people have a right to see what those who represent them or want to represent them have to say," Harbath said. "And I still think a big part of that is true." Harbath said that removing content from the larger platforms often just sends it to smaller, more niche ones.

Getachew has concerns about how platforms decide what is and isn’t newsworthy. He says Facebook and Twitter decide it case by case, so it’s hard for the public or politicians to know what they can and cannot post.

On June 20, Eric Greitens, the former Missouri governor running for Senate, posted a campaign ad about "hunting RINOs" (RINO is short for Republican in name only) that violated Twitter’s rules banning violent threats and targeted harassment. Although Twitter limited users’ ability to interact with the tweet, it remains up with a link to the company’s public-interest exception. It’s unclear how Twitter decided to handle this tweet or what public interest it serves.

We are sick and tired of the Republicans in Name Only surrendering to Joe Biden & the radical Left.

Order your RINO Hunting Permit today! pic.twitter.com/XLMdJnAzSK

— Eric Greitens (@EricGreitens) June 20, 2022

"It’s typically human beings making that call, and not every human being is going to read it the same way," said Ginny Badanes, senior director of Microsoft’s Democracy Forward Program.

Last September, The Wall Street Journal reported that a Facebook program called XCheck ("cross-check") exempts millions of famous users, such as politicians and celebrities, from community guidelines.

Since that news broke, the Facebook Oversight Board has forced the company to release a transparency report outlining its decision-making process for XCheck. The report says that before September 2020, "most employees had the ability to add a user or entity to the cross-check list." Now, "while any employee can request that a user or entity be added to the cross-check lists, only a designated group of employees have the authority to make additions to the list." The report does not say who is included in that designated group.

Facebook has other loopholes. Although Donald Trump was banned from the platform for two years following Jan. 6, his official PAC maintains a page.

Civic activists have called on platforms to address the midterms

Those who watch the platforms closely say enforcement of misinformation rules seems to ramp up before and during elections and taper off once they are over. That creates inconsistency.

"It just keeps raising the question of, ‘Why is there an off-period for civic integrity policies?’" said Steiner.

Common Cause and 130 other public interest organizations — including the NAACP, Greenpeace, and the League of Women Voters — sent a letter in May asking platforms to limit election misinformation proactively in 2022. Their recommendations include auditing algorithms that look for disinformation, downranking known falsehoods, creating full-time civic integrity teams, ensuring policies are applied retroactively — i.e., to content posted before the rule was instituted — moderating live content, sharing data with researchers and creating transparency reports on enforcement's effectiveness. (Common Cause has contributed to PolitiFact for fact-checking of election misinformation.)

Getachew says platforms haven’t responded much to the letter, although Facebook confirmed receipt and gave a noncommittal response. He said Common Cause continues to have private conversations with the company.

The platforms are making some internal changes. Last year, Twitter launched Birdwatch, a pilot program that lets users create contextualizing "notes" for potentially misleading tweets. Other users then rate the notes based on how helpful they are.

Common Cause is also advocating for the bipartisan American Data Privacy and Protection Act, which would decrease the amount of data social media companies can collect about users, meaning those users would receive less individualized feeds. The bill passed committee and was sent to the House floor July 20.

Harbath is worried about platforms letting up on misinformation enforcement, but she’s also optimistic. "We are in a much better place since 2016," she said. "There are more tools being built. The public is getting smarter about being on the lookout for mis- and disinfo and how to be a bit more discerning reader."

"I don't think that anybody wants to see any American election, or any election anywhere in the world for that matter, become widely manipulated or delegitimized through the spread of false and misleading claims," said DiResta. "And I think that should be an area where academia, platforms, civil society and just ordinary people agree that it's in our best interest to work together."

Our Sources

CNN, Twitter says it has quit taking action against lies about the 2020 election, Jan. 28, 2022

Columbia Journalism Review, "Newsworthiness," Trump, and the Facebook Oversight Board, April 26, 2021

Common Cause, As a Matter of Fact: The Harms Caused by Election Disinformation Report, Oct. 27, 2021

Common Cause, Coalition Letter for 2022 Midterms, May 12, 2022

Common Cause, Trending in the Wrong Direction: Social Media Platforms’ Declining Enforcement of Voting Disinformation, Sept. 2, 2021

Congress.gov, H.R.8152 - American Data Privacy and Protection Act, accessed July 27, 2022

Congressional Research Service, Overview of the American Data Privacy and Protection Act, H.R. 8152, June 30, 2022

Disinfo Defense League, Hearing on "Protecting America's Consumers: Bipartisan Legislation to Strengthen Data Privacy and Security," June 14, 2022

Email interview with Ayobami Olugbemiga, Policy Communications Manager at Meta, July 27, 2022

Email interview with Corey Chambliss, Public Affairs at Meta, July 27, 2022

Email interview with Ivy Choi, YouTube spokesperson, July 26, 2022

Eric Greitens, Tweet, June 20, 2022

Facebook, Arizona Ballot Mules Exposed

Facebook, Election Integrity at Meta, accessed July 12, 2022

Facebook, Official Team Trump, accessed July 25, 2022

Forbes, Despite Trump’s Facebook ban, His PAC Is raising money through Facebook ads, June 21, 2021

Jan. 6 Committee Hearing, July 12, 2022

Journal of Quantitative Description: Digital Media, Repeat spreaders and election delegitimization: A comprehensive dataset of misinformation tweets from the 2020 U.S. election, Dec. 15, 2021

Meta Business Help Center, Fact-Checking Policies on Facebook, accessed July 25, 2022

Meta Newsroom, Meeting the Unique Challenges of the 2020 Elections, June 26, 2020

Meta Transparency Center, Counting strikes, Jan. 19, 2022

Meta Transparency Center, Penalties for sharing fact-checked content, Jan. 19, 2022

Meta Transparency Center, Reviewing high-impact content accurately via our cross-check system, Jan. 19, 2022

Meta, Meta’s approach to the 2022 US midterm elections, accessed July 14, 2022

Mozilla Foundation, Th€se are not po£itical ad$: How partisan influencers are evading TikTok’s weak political ad policies, June 2021

New York Times, As midterms loom, elections are no longer top priority for Meta C.E.O., June 23, 2022

NPR, Trump suspended from Facebook for two years, accessed July 25, 2022

Politico, The online world still can’t quit the ‘Big Lie,’ Jan. 6, 2022

PolitiFact, How Facebook, TikTok are addressing misinformation before Election Day, Oct. 19, 2020

PolitiFact, New Jersey poll worker did not commit a crime when providing provisional ballot, Nov. 9, 2021

ProPublica, YouTube promised to label state-sponsored videos but doesn’t always do so, Nov. 22, 2019

Telephone interview with Emerson Brooking, Senior Fellow at the Digital Forensic Research Lab of the Atlantic Council, July 14, 2022

Telephone interview with Ginny Badanes, Senior Director of Microsoft’s Democracy Forward Program, July 25, 2022

Telephone interview with Katie Harbath, former Public Policy Director for Global Elections at Facebook, July 18, 2022

Telephone interview with Rachel Moran, Postdoctoral scholar on misinformation and disinformation at the University of Washington, July 18, 2022

Telephone interview with Renée DiResta, Research Manager at the Stanford Internet Observatory, July 13, 2022

Telephone interview with TikTok Communications, July 26, 2022

Telephone interview with Yosef Getachew and Emma Steiner, Media and Democracy Program Director and Disinformation Analyst at Common Cause, July 21, 2022

The Election Integrity Partnership, The Long Fuse: Misinformation and the 2020 Election, June 15, 2021

TikTok Community Guidelines, accessed July 26, 2022

TikTok Newsroom, TikTok launches in-app guide to the 2020 US elections, Sept. 29, 2020

TikTok, Election Integrity, accessed July 12, 2022

Twitter Blog, An update on our work around the 2020 US Elections, Nov. 12, 2020

Twitter Help Center, About public-interest exceptions on Twitter, accessed July 26, 2022

Twitter Help Center, Abusive behavior, accessed July 28, 2022

Twitter Help Center, Civic integrity policy, October 2021

Twitter Help Center, Defining public interest on Twitter, accessed July 26, 2022

Wall Street Journal, Facebook says its rules apply to all. Company documents reveal a secret elite that’s exempt, Sept. 13, 2021

Washington Post, TikTok Is the New Front in Election Misinformation, June 29, 2022

Washington Post, Twitter and Facebook warning labels aren’t enough to save democracy, Nov. 9, 2020

YouTube Help, See fact checks in YouTube search results, accessed July 27, 2022

YouTube Help, YouTube Election Misinformation Policy, accessed July 13, 2022

YouTube Official Blog, How YouTube supports elections, accessed July 25, 2022

YouTube, ILLEGAL: NJ election worker: ‘I’ll let you [Non-Citizen/Non-Registered Voter] fill out a ballot’, Nov. 3, 2021