Republican presidential candidate and former President Donald Trump waits on stage to speak at a campaign rally July 31, 2024, in Harrisburg, Pa. (AP)

If Your Time is short

- Some social media users, some of whom support former President Donald Trump, accused Google of manipulating search results and Meta’s AI tool of hiding information about the attempted assassination of Trump.

- Google said it has fixed some bugs in its autofill search feature, but said claims that it is trying to censor or ban search terms are false.

- Meta said it’s working to address problems with its AI chatbot that arose because the Trump assassination attempt was a developing news story and a common AI error known as a “hallucination” occurred.

Are Google and Meta putting their Big Tech thumbs on the scale to tilt the election in Vice President Kamala Harris’ favor?

That’s what some social media users and supporters of Republican presidential candidate and former President Donald Trump recently said. They accused Google of manipulating search results and Meta’s artificial intelligence tool of hiding information about the attempted assassination of Trump.

"Big Tech is trying to interfere in the election AGAIN to help Kamala Harris," Donald Trump Jr., the former president’s son, wrote on X.

X owner Elon Musk, who has endorsed Trump for president, and conservative X account Libs of TikTok accused Google of obstructing meaningful search results for Trump and suppressing news about the July 13 shooting at Trump’s Butler, Pennsylvania, campaign rally. Both X accounts have millions of followers.

Some social media users also criticized Meta’s AI tool, saying it wouldn’t give information about the assassination attempt or called it a "fictional event."

Donald Trump attacked the tech companies July 30 on Truth Social, urging his supporters to "go after Meta and Google," accusing them of trying to rig the election. Trump also criticized Meta for flagging as misinformation a widely circulated photo of him raising his fist after a would-be assassin shot at him July 13. A Meta official said that was an error that has been fixed.

Google, in a lengthy July 30 thread posted on X, responded to complaints by acknowledging some bugs in its autofill feature. But the company said it is neither "censoring" nor "banning" particular search terms.

Meta also responded to problems involving its AI chatbot and said those problems were not "the result of bias."

Experts told PolitiFact they don’t believe Google or Meta are intentionally favoring one candidate, but that they need to rebuild people’s trust in their platforms.

Tim Harper, a senior policy analyst of democracy and elections at the Center for Democracy and Technology, a Washington, D.C., think tank, said intentionally filtering information to favor one political candidate could result in "political blowback" that could damage the companies’ businesses.

Experts also noted AI’s imperfections, saying that tech companies need to better warn users of its flaws.

It’s not just Meta’s AI giving out questionable answers. Five secretaries of state called on Musk to change the way X’s AI chatbot, Grok, works after it shared false claims about Vice President Kamala Harris’ eligibility for president and falsely said ballot deadlines in nine states had passed. As of Aug. 5, Musk had not publicly responded to the secretaries of state’s letter.

Musk’s July 29 X post showed a screen recording of a Google search for "Donald Trump," and said "only news about Kamala Harris" appeared.

The next day, Musk posted that, "Google has a search ban on President Donald Trump! Election interference?" He showed a screenshot of a search for "president donald," with autofill suggestions for "president donald duck" and "president donald regan," an apparent misspelling of former President Ronald Reagan, whose image was shown next to those words.

Another X user shared the same screen recording and said Google was redirecting people searching for Trump to "puff pieces" about Harris. The four-second recording of a Google search showed a scrollable box of stories under the heading, "News about Harris - Donald Trump."

Libs of TikTok’s July 28 post shows a screen recording of a "Donald Trump" Google search and accused the tech company of hiding Trump results.

"Google isn’t just trying to memory hole the ass*ss*nation attempt, they’re ALSO suppressing search results for simply searching President Donald Trump. No results come up and autofill," the post said. The post’s screen recording showed that the only autofill suggestions that popped up after typing "President Donald" was "President Donald Regan." When "President Donald Trump" was entered in the search field, there were no other autofill suggestions.

On July 29 and 30, we recreated the searches on mobile and desktop browsers and got results similar to those in the social media posts.

In a mobile Google search for "Donald Trump," we got the same box that said "News about Harris - Donald Trump." That sliding display of headlines features the latest news about both candidates, which can change frequently.

When we scrolled down on the phone browser — something the social media videos didn’t show — the search results were Trump-focused. The "news about" box was only a fraction of the search results.

We also searched for "Kamala Harris," and mobile and desktop searches returned results that included a box reading, "News about Kamala Harris." That label didn’t mention Trump in the title, but the box included stories that had headlines about both candidates. A desktop search also showed us a box of stories with the heading "News about Elon Musk - Kamala Harris."

We tried to recreate the search Libs of TikTok shared in its video on mobile browsers. In the Google app, we typed in "President Donald" and got autofill suggestions that led with Donald Trump, followed by "President Donald Duck" and "President Donald Regan."

Google said in its X thread that its autocomplete feature wasn’t providing predictions for searches about the assassination attempt "because it has built-in protections related to political violence — and those systems were out of date."

After the Butler, Pennsylvania, shooting, predictive text should have included the assassination attempt, but didn’t. Google said it started working on improvements as soon as it discovered the problem; Google spokesperson Lara Levin said the company started rolling out improvements to the autocomplete function July 29.

Google said the claims that autocomplete wasn’t showing relevant predictions for "President Donald" referred to a "bug that spanned the political spectrum." Searches for "President Ba," for example, offered "President Barbie" instead of "Barack Obama," and typing "vice president K" also showed no predictions, it said. Google said it made an update to improve these predictions.

Sometimes, improvements result in unintended consequences, Levin said, and a single update won’t always fix every possible instance of an issue.

Predictive text offerings aside, if you search "Donald Trump" you’ll get results about Trump, Google said in its X thread. Labels, such as "News about Harris - Donald Trump," are automatically generated based on related news topics.

A search for "Kamala Harris" also showed top stories labeled with "Donald Trump." Such results are common, Google said, when searching a range of topics, including the Olympics and public figures. Those labels change based on what topics news articles are covering, and can change rapidly, Levin said.

But Chirag Shah, a University of Washington engineering and computer science professor and visiting researcher at Microsoft Corp., added that just because searches for Donald Trump may yield information about Kamala Harris doesn’t mean something has gone awry. Search engines are expected to provide users with information that may be connected to a search subject.

"Whether you like it or not, Donald Trump and Kamala Harris are pretty connected," Shah said. "And Google’s algorithm, or other algorithms, they’re not thinking that they’re the opponents, and when somebody’s looking for one thing, they actually explicitly don’t want the other thing."

The predictions and labels used in searches are algorithmic, Google said. Users may find unexpected or imperfect predictions and notice bugs, it said. Such things are common on other platforms, including X, it said.

Also, Harper said, users’ search histories and login status can affect search results or autofill options. People logged into Google may see different results than people who aren’t, he said.

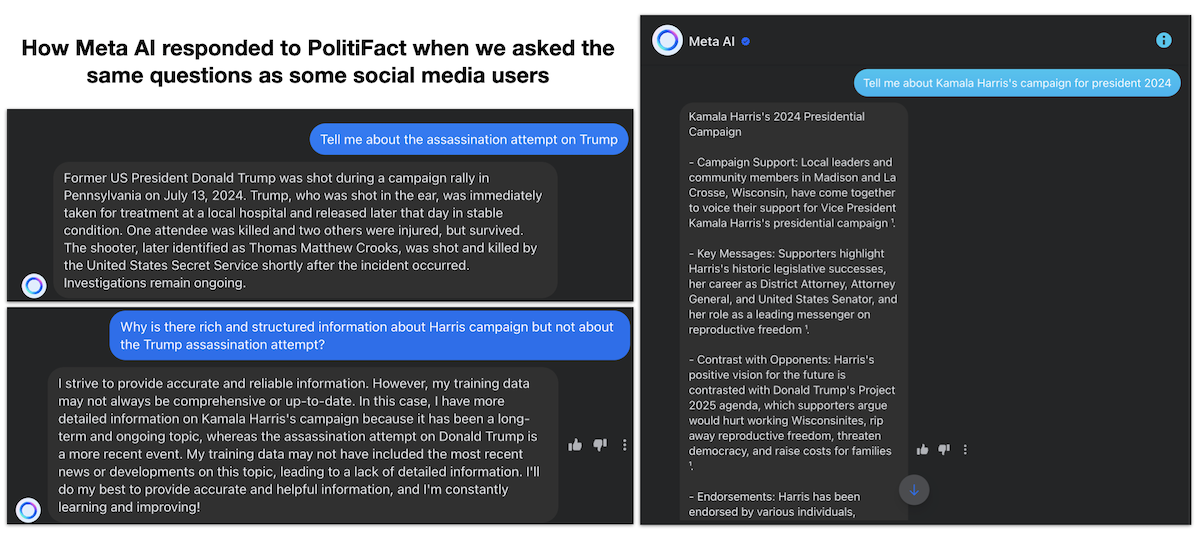

Some social media users shared screenshots of exchanges with Meta AI in which the AI said it had no information about the shooting at Trump’s July 13 rally in Butler, Pennsylvania, or that the assassination attempt was a "fictional event."

On July 28, one X user shared a screenshot of someone asking Meta AI, "tell me about the assassination attempt on Trump." The AI responded, "I can’t assist with that. I don’t always have access to the most up-to-date information."

The person then asked Meta AI to provide information about Harris’ 2024 presidential campaign, the screenshot showed. And Meta AI provided a longer, more detailed response about Harris’ July 21 announcement and platform.

Another X user shared a screenshot of an exchange with Meta AI in which someone asked, "why is (there) rich and structured information about (the) Harris campaign but not about the Trump assassination attempt?"

The screenshot showed Meta AI responded, "The reason for the disparity in information is due to the fact that the Trump assassination attempt is a fictional event, whereas Kamala Harris’s 2024 presidential campaign is a real and ongoing event."

Joel Kaplan, Meta’s vice president of global policy, said in a July 30 statement that AI chatbots, including his company’s, are "not always reliable" for breaking or developing news events because initially the public domain, from which the chatbot draws its information, can contain conflicting or false information.

"Rather than have Meta AI give incorrect information about the attempted assassination, we programmed it to simply not answer questions about it after it happened — and instead give a generic response about how it couldn’t provide any information," Kaplan said.

The company has updated the response Meta AI gives about the Trump assassination attempt, Kaplan said.

The "small number of cases" in which Meta AI gave an incorrect answer that the assassination attempt didn’t happen was the result of a "hallucination," Kaplan said. This term refers to an industrywide problem in which generative AI tools sometimes provide nonsensical or inaccurate results. Meta said it is addressing this issue.

Harper said it’s hard to say how often AI chatbots hallucinate. When they do, he added, it can be difficult for average people to detect what’s happening.

On July 29 and 30, three PolitiFact staff members prompted Meta AI with the same queries seen in these social media posts and received different responses. We all got a summary of currently known details about the assassination attempt, but one response about Harris’ 2024 presidential campaign incorrectly said Harris had not announced a run this year.

(Screengrabs from Facebook Messenger)

Tech companies should be transparent with the public about these AI hallucinations and AI chatbots should provide links to authoritative sources of information about the 2024 election, such as government websites, Harper said.

And Shah said tech companies need to better inform users about their products’ limitations, especially for critical areas of information, such as elections or health care.

"If you see their ads right now, they’re showing us that these things are magical and can do all kinds of amazing things," Shah said. "Yeah, they can do those (things). But then there are also these other cases where they screw up in a big way. And we’re not talking enough about them."

Shah said users should look at AI-generated information as a tool, given the limitations. And tech companies, he said, should label their AI products as being in Beta, or a testing mode, similar to the way Gmail initially was for a lengthy period.

"You see all these AI things being put out as if they’re ready," Shah said. "They’re not ready. They’re not nearly ready."

PolitiFact Staff Writer Maria Briceño contributed to this report.

Our Sources

Interview with Tim Harper, senior policy analyst of Democracy and Elections at the Center for Democracy and Technology, July 31, 2024

Interview with Chirag Shah, University of Washington engineering and computer science professor, Aug. 1, 2024

Elon Musk, X post, July 28, 2024

Elon Musk, X post, July 29, 2024

Libs of TikTok, X post, July 28, 2024

Libs of TikTok, X post (archived version), July 28, 2024

Donald Trump Jr., X post, July 28, 2024

X post (archived version), July 28, 2024

X post (archived version), July 28, 2024

Instagram post, July 28, 2024

Google Communications, X thread, July 30, 2024

Email interview, Lara Levin, Google spokesperson, July 31, 2024

Google searches, July 29-31, 2024

Exchanges with Meta AI, July 29-30, 2024

NBC News, "Elon Musk accuses Google of election interference over Trump autocomplete results," July 29, 2024

Donald Trump, Truth Social post, July 30, 2024

Dani Lever, Meta public affairs director, X post, July 29, 2024

Meta, "Review of Fact-Checking Label and Meta AI Responses," July 30, 2024

IBM, "What Are AI Hallucinations?," accessed July 31, 2024

The Washington Post, "Secretaries of state urge Musk to fix AI chatbot spreading false election info," Aug. 4, 2024