People listen to an artificial intelligence seminar at the Forsyth County Senior Center, June 25, 2024, in Cumming, Ga. (AP)

Days after New Hampshire voters received a robocall with an artificially generated voice that resembled President Joe Biden’s, the Federal Communications Commission banned using AI-generated voices in robocalls.

It was a flashpoint. The 2024 election would be the first to unfold amid wide public access to AI generators, which let people create images, audio and video — some for nefarious purposes.

Institutions rushed to limit AI-enabled misdeeds.

Sixteen states enacted legislation around AI’s use in elections and campaigns; many of these states required disclaimers in synthetic media published close to an election. The Election Assistance Commission, a federal agency supporting election administrators, published an "AI toolkit" with tips election officials could use to communicate about elections in an age of fabricated information. States published their own pages to help voters identify AI-generated content.

Experts warned about AI’s potential to create deepfakes that made candidates appear to say or do things that they didn’t. The experts said AI’s influence could hurt the U.S. both domestically, by misleading voters, affecting their decision-making or deterring them from voting, and abroad, by benefiting foreign adversaries.

But the anticipated avalanche of AI-driven misinformation never materialized. As Election Day came and went, viral misinformation played a starring role, misleading about vote counting, mail-in ballots and voting machines. But this chicanery leaned largely on old, familiar techniques, including text-based social media claims and video or out-of-context images.

"The use of generative AI turned out not to be necessary to mislead voters," said Paul Barrett, deputy director of the New York University Stern Center for Business and Human Rights. "This was not ‘the AI election.’"

Daniel Schiff, assistant professor of technology policy at Purdue University, told PolitiFact there was no "massive eleventh hour campaign" that misled voters about polling places and affected turnout. "This kind of misinformation was smaller in scope and unlikely to have been the determinative factor in at least the presidential election," he said.

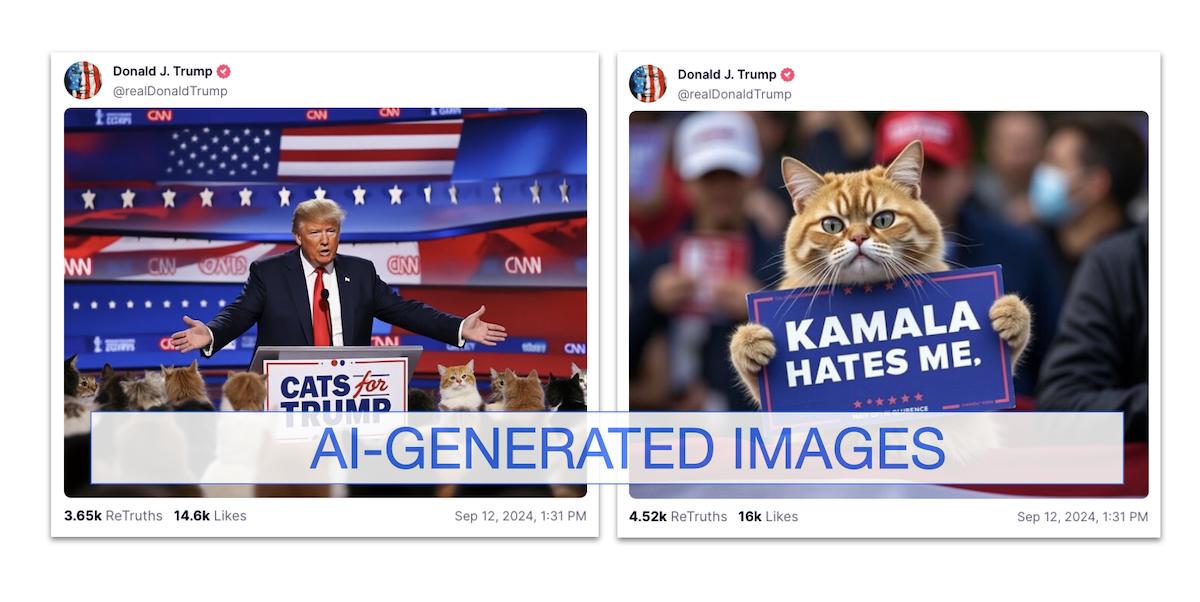

The AI-generated claims that got the most traction supported existing narratives rather than fabricating new claims to fool people, experts said. For example, after former President Donald Trump and his vice presidential running mate, JD Vance, falsely claimed that Haitians were eating pets in Springfield, Ohio, AI-generated images and memes depicting animal abuse flooded the internet.

(Screenshots from Truth Social)

Meanwhile, technology and public policy experts said, safeguards and legislation minimized AI’s potential to create harmful political speech.

Schiff said AI’s potential election harms sparked "urgent energy" focused on finding solutions.

"I believe the significant attention by public advocates, government actors, researchers, and the general public did matter," Schiff said.

Meta, which owns Facebook, Instagram and Threads, required advertisers to disclose AI use in any ads about politics or social issues. TikTok applied a mechanism to automatically label some AI-generated content. OpenAI, the company behind ChatGPT and DALL-E, banned the use of its services for political campaigns and prevented users from generating images of real people.

Misinformation actors used traditional techniques

Siwei Lyu, computer science and engineering professor at the University at Buffalo and a digital media forensics expert, said AI’s power to influence the election also faded because there were other ways to gain this influence.

"In this election, AI’s impact may appear muted because traditional formats were still more effective, and on social network-based platforms like Instagram, accounts with large followings use AI less," said Herbert Chang, assistant professor of quantitative social science at Dartmouth College. Chang co-wrote a study that found AI-generated images "generate less virality than traditional memes," but memes created with AI also generate virality.

Prominent people with large followings easily spread messages without needing AI-generated media. Trump, for example, repeatedly falsely said in speeches, media interviews and on social media that illegal immigrants were being brought into the U.S. to vote even though cases of noncitizens voting are extremely rare and citizenship is required for voting in federal elections. We rated that claim Pants on Fire, but polling showed Trump’s repeated claim paid off: More than half of Americans in October said they were concerned about noncitizens voting in the 2024 election.

PolitiFact’s fact-checks and stories about election-related misinformation singled out some images and videos that employed AI, but many pieces of viral media were what experts term "cheap fakes" — authentic content that had been deceptively edited without AI.

In other cases, politicians flipped the script, blaming or disparaging AI instead of using it. Trump, for example, falsely claimed that a montage of his gaffes that the Lincoln Project released was AI-generated, and he said a crowd of Harris supporters was AI-generated. After CNN published a report that North Carolina Lt. Gov. Mark Robinson made offensive comments on a porn forum, Robinson claimed it was AI. An expert told Greensboro, North Carolina’s WFMY-TV that what Robinson had claimed would be "nearly impossible."

AI used to stoke ‘partisan animus’

Authorities discovered a New Orleans street magician created January’s fake Biden robocall. The magician said it took him only 20 minutes and $1 to create the audio.

The political consultant who hired the magician to make the call faces a $6 million fine and 13 felony charges.

It was a standout moment partly because it wasn’t repeated.

AI did not drive the spread of two major misinformation narratives in the weeks leading up to Election Day — the fabricated pet-eating claims and falsehoods about the Federal Emergency Management Agency’s relief efforts following Hurricanes Milton and Helene, said Bruce Schneier, adjunct lecturer in public policy at the Harvard Kennedy School.

"We did witness the use of deepfakes to seemingly quite effectively stir partisan animus, helping to establish or cement certain misleading or false takes on candidates," Schiff said.

He worked with Kaylyn Schiff, an assistant professor of political science at Purdue, and Christina Walker, a Purdue doctoral candidate, to create a database of political deepfakes.

The majority of the deepfake incidents were created as satire, the data showed. Behind that were deepfakes that intended to harm someone’s reputation. And the third most common deepfake was created for entertainment.

Deepfakes that criticized or misled people about candidates were "extensions of traditional U.S. political narratives," Daniel Schiff said, such as ones painting Harris as a communist or a clown, or Trump as a fascist or a criminal. Chang agreed with Daniel Schiff, saying generative AI "exacerbated existing political divides, not necessarily with the intent to mislead but through hyperbole."

Major foreign influence operations relied on actors, not AI

Researchers warned in 2023 that AI could help foreign adversaries conduct influence operations faster and cheaper. The Foreign Malign Influence Center — which assesses foreign influence activities targeting the U.S. — in late September said AI had not "revolutionized" those efforts.

To threaten the U.S. elections, the center said, foreign actors would have to overcome AI tools’ restrictions, evade detection and "strategically target and disseminate such content."

Intelligence agencies, including the Office of the Director of National Intelligence, the FBI and the Cybersecurity and Infrastructure Security Agency, flagged foreign influence operations, but those efforts more often employed actors in staged videos. A video showed a woman who claimed Harris had struck and injured her in a hit-and-run car crash. The video’s narrative was wholly fabricated, but not AI. Analysts tied the video to a Russian network dubbed Storm-1516, which used similar tactics in videos that sought to undermine election trust in Pennsylvania and Georgia.

Platform safeguards and state legislation likely helped curb ‘worst behavior’

A person stands in front of a Meta sign outside of the company's headquarters in Menlo Park, Calif., March 7, 2023. (AP)

Social media and AI platforms sought to make it harder to use their tools to spread harmful political content by adding watermarks, labels and fact-checks to claims.

Both Meta AI and OpenAI said their tools rejected hundreds of thousands of requests to generate AI images of Trump, Biden, Harris, Vance and Democratic vice presidential candidate Minnesota Gov. Tim Walz. In a Dec. 3 report about global elections in 2024, Meta’s president for global affairs, Nick Clegg, said, "Ratings on AI content related to elections, politics and social topics represented less than 1% of all fact-checked misinformation."

Still, there were shortcomings.

The Washington Post found that, when prompted, ChatGPT still composed campaign messages targeting specific voters. PolitiFact also found that Meta AI easily produced images that could have supported the narrative that Haitians were eating pets.

Daniel Schiff said the platforms have a long road ahead as AI technology improves. But at least in 2024, the precautions they took and states’ legislative efforts appeared to have paid off.

"Strategies like deepfake detection, and public-awareness raising efforts, as well as straight-up bans, I think all mattered," Schiff said.

Our Sources

Email exchange with Paul Barrett, New York University Stern Center for Business and Human Rights Deputy Director, Dec. 12, 2024

Phone interview with Bruce Schneier, adjunct lecturer in public policy at the Harvard Kennedy School, Dec. 12, 2024

Email exchange with Herbert Chang, assistant professor of quantitative social science at Dartmouth College, Dec. 12, 2024

Email exchange with Christina Walker, Purdue University doctoral candidate, Dec. 13, 2024

Email exchange with Daniel Schiff, assistant professor of technology policy at Purdue University, Dec. 13, 2024

Email exchange with Kaylyn Schiff, assistant professor of political science at Purdue University, Dec. 13, 2024

Email exchange with Siwei Lyu, computer science and engineering professor at the University at Buffalo, Dec. 13, 2024

Federal Communications Commission, FCC Makes AI-Generated Voices in Robocalls Illegal, Feb. 8, 2024

National Conference of State Legislatures, Artificial Intelligence (AI) in Elections and Campaigns, Oct. 24, 2024

U.S. Election Assistance Commission, AI Toolkit for Election Officials, August 2023

New Mexico Secretary of State, AI: Seeing is No Longer Believing, accessed Dec. 18, 2024

Michigan Department of Attorney General, Protecting Michigan Voters from AI-Generated Election Misinformation, accessed Dec. 18, 2024

Vermont Secretary of State, A.I. Deepfakes and Scams, accessed Dec. 18, 2024

PolitiFact, How improperly using AI could deter you from voting, or hurt your health, July 17, 2023

PolitiFact, Fake Joe Biden robocall in New Hampshire tells Democrats not to vote in the primary election, Jan. 22, 2024

PolitiFact, How generative AI could help foreign adversaries influence U.S. elections, Dec. 5, 2023

PolitiFact, ‘They’re eating the pets:’ Trump, Vance earn PolitiFact’s Lie of the Year for claims about Haitians, Dec. 17, 2024

PolitiFact, Birtherism is back, but Kamala Harris, born in California, is eligible to be president, July 22, 2024

PolitiFact, Analysis: How PolitiFact fact-checked 2024 election claims on vote counting, mail ballots, machines, Nov. 26, 2024

Meta, How Meta Is Planning for Elections in 2024, Nov. 28, 2023

TikTok, Partnering with our industry to advance AI transparency and literacy, May 9, 2024

OpenAI, How OpenAI is approaching 2024 worldwide elections, update on Nov. 8, 2024

Al Jazeera, Meta says AI had only ‘modest’ impact on global elections in 2024, Dec. 3, 2024

The Conversation, The apocalypse that wasn’t: AI was everywhere in 2024’s elections, but deepfakes and misinformation were only part of the picture, Dec. 2, 2024

Ho-Chun Herbert Chang, Ben Shaman, Yung-chun Chen, Mingyue Zha, Sean Noh, Chiyu Wei, Tracy Weener, Maya Magee, Generative Memesis: AI Mediates Political Information in the 2024 United States Presidential Election, Nov. 1, 2024

PolitiFact, Trump's claim that millions of immigrants are signing up to vote illegally is Pants on Fire!, Jan. 12, 2024

Forbes, Over Half Of Americans Worried Non-Citizens Vote Illegally, Poll Finds—Despite Little Evidence, Sept. 18, 2024

PolitiFact, Harris’ speech on abortion was likely AI-generated, imposed on a home insurance ad from 2021, Oct. 3, 2024

PolitiFact, ‘Cheap fake’ videos, and the phrase itself, take 2024 election’s center stage, June 21, 2024

PolitiFact, Donald Trump claimed the Lincoln Project used AI to make him ‘look bad.’ But the clips are real, Dec. 19, 2023

PolitiFact, Donald Trump is wrong. These images of Kamala Harris’ Aug. 7 Detroit rally are corroborated, not AI., Aug. 12, 2024

CNN, ‘I’m a black NAZI!’: NC GOP nominee for governor made dozens of disturbing comments on porn forum, Sept. 19, 2024

Internet Archive, CNN This Morning CNN September 20, 2024 2:00am-3:01am PDT

CNN News Central transcript, Sept. 20, 2024

WFMY News, Mark Robinson blames AI for posts in scathing report. But is it possible?, Sept. 20, 2024

The Washington Post, Mark Robinson offers up the 2024 version of the I-was-hacked defense, Sept. 20, 2024

PolitiFact, AI-generated audio deepfakes are increasing. We tested 4 tools designed to detect them., March 20, 2024

The Associated Press, Political consultant behind fake Biden robocalls faces $6 million fine and criminal charges, May 23, 2024

Christina Walker, Daniel Schiff, Kaylyn Jackson Schiff, Merging AI Incidents Research with Political Misinformation Research: Introducing the Political Deepfakes Incidents Database, March 24, 2024

PolitiFact, Fact-check: Trump called Kamala Harris a communist and a Marxist. Pants on Fire!, Sept. 4, 2024

PolitiFact, No, this isn’t an authentic image of former President Donald Trump getting arrested after verdict, May 31, 2024

Rand Corp., The Rise of Generative AI and the Coming Era of Social Media Manipulation 3.0, Sept. 7, 2023

Foreign Malign Influence Center, Foreign Malign Influence (FMI) and Elections, Volume 2, October 2024

Office of the Director of National Intelligence, Organization, Foreign Malign Influence Center, accessed Dec. 17, 2024

Meta, What We Saw on Our Platforms During 2024’s Global Elections, Dec. 3, 2024

Pew Research Center, Americans in both parties are concerned over the impact of AI on the 2024 presidential campaign, Sept. 19, 2024

Foreign Malign Influence Center, Election Security Update as of Mid-September 2024, Sept. 23, 2024

PolitiFact, No proof Kamala Harris injured a person in 2011 car crash. Flawed story comes from unreputable site, Sept. 5, 2024

PolitiFact, Video doesn’t show election worker ripping ballots in a Pennsylvania county. They’re fake ballots., Oct. 25, 2024

PolitiFact, Video shows Haitians who claim they’re voting for Harris in multiple Georgia counties. That’s fake, Nov. 1, 2024

PolitiFact, Not-so-innocent ‘cat memes’: AI creations give life to unsubstantiated Springfield, Ohio, claims, Sept. 25, 2024

PolitiFact, Russian influence operations intensified ahead of Election Day. Officials expect it to continue., Nov. 8, 2024

NBC News, ChatGPT rejected more than 250,000 image generations of presidential candidates before Election Day, Nov. 9, 2024

The Washington Post, ChatGPT breaks its own rules on political messages, Aug. 28, 2023

Time, AI’s Underwhelming Impact on the 2024 Elections, Oct. 30, 2024

National Conference of State Legislatures, Artificial Intelligence 2024 Legislation, accessed Dec. 13, 2024